Keras Model Callbacks

0. Import the required libraries

import matplotlib as mpl
import matplotlib.pyplot as plt
%matplotlib inline
import numpy as np
import sklearn
import pandas as pd
import os
import sys
import time
import tensorflow as tf
from tensorflow import keras

print(tf.__version__)
print(sys.version_info)
# Print the version of every library used in this post.
for module in mpl, np, pd, sklearn, tf, keras:
    print(module.__name__, module.__version__)

2.0.0-alpha0

sys.version_info(major=3, minor=6, micro=13, releaselevel='final', serial=0)

matplotlib 3.3.4

numpy 1.16.2

pandas 1.1.5

sklearn 0.24.1

tensorflow 2.0.0-alpha0

tensorflow.python.keras.api._v2.keras 2.2.4-tf

1. Load the dataset and split it into training, validation, and test sets

fashion_mnist = keras.datasets.fashion_mnist
(x_train_all, y_train_all), (x_test, y_test) = fashion_mnist.load_data()
# Hold out the first 5,000 training images for validation; train on the remaining 55,000.
x_valid, x_train = x_train_all[:5000], x_train_all[5000:]
y_valid, y_train = y_train_all[:5000], y_train_all[5000:]
print(x_valid.shape, y_valid.shape)
print(x_train.shape, y_train.shape)
print(x_test.shape, y_test.shape)

(5000, 28, 28) (5000,)

(55000, 28, 28) (55000,)

(10000, 28, 28) (10000,)

2. Standardize the data

# Standardize: x = (x - u) / std
from sklearn.preprocessing import StandardScaler
scaler = StandardScaler()
# Flatten every pixel into one column: [N, 28, 28] -> [N * 784, 1].
# The scaler therefore learns a single global mean and std over all training
# pixels; the validation and test sets reuse those training statistics.
x_train_scaled = scaler.fit_transform(
    x_train.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)
x_valid_scaled = scaler.transform(
    x_valid.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)
x_test_scaled = scaler.transform(
    x_test.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28)
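
To make the effect of reshape(-1, 1) concrete, here is a minimal NumPy-only sketch of the same idea. The tiny 2x2 "images" and the helper name standardize are made up for illustration; the sketch computes the statistics by hand instead of calling StandardScaler, under the assumption that a single global mean and population standard deviation are what the scaler fits on a one-column input.

```python
import numpy as np

# Hypothetical toy data: 4 training "images" and 1 test "image" of 2x2 pixels.
x_train = np.array([[[0, 255], [128, 64]],
                    [[10, 20], [30, 40]],
                    [[255, 255], [0, 0]],
                    [[5, 100], [200, 50]]], dtype=np.float32)
x_test = np.array([[[0, 128], [255, 32]]], dtype=np.float32)

# "Fit": one mean and one std over every training pixel,
# which is what a single-column reshape leads to.
mean = x_train.reshape(-1, 1).mean()
std = x_train.reshape(-1, 1).std()  # population std (ddof=0)

def standardize(x, mean, std):
    """Apply x -> (x - mean) / std and restore the original image shape."""
    return ((x.reshape(-1, 1) - mean) / std).reshape(x.shape)

x_train_scaled = standardize(x_train, mean, std)
x_test_scaled = standardize(x_test, mean, std)  # reuses the training statistics

print(x_train_scaled.shape, x_test_scaled.shape)
print(float(x_train_scaled.mean()), float(x_train_scaled.std()))
```

After scaling, the training pixels have a mean close to 0 and a standard deviation close to 1, while the test pixels are shifted and scaled by the training statistics and generally will not be exactly centered.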

3. Build the model

# Equivalent construction using tf.keras.models.Sequential() with add():
"""
model = keras.models.Sequential()
model.add(keras.layers.Flatten(input_shape=[28, 28]))
model.add(keras.layers.Dense(300, activation="relu"))
model.add(keras.layers.Dense(100, activation="relu"))
model.add(keras.layers.Dense(10, activation="softmax"))
"""
model = keras.models.Sequential([
    keras.layers.Flatten(input_shape=[28, 28]),
    keras.layers.Dense(300, activation='relu'),
    keras.layers.Dense(100, activation='relu'),
    keras.layers.Dense(10, activation='softmax')
])
# relu: y = max(0, x)
# softmax: turns a vector into a probability distribution. For x = [x1, x2, x3],
# y = [e^x1/sum, e^x2/sum, e^x3/sum], where sum = e^x1 + e^x2 + e^x3
# Why "sparse": the labels y are integer class indices, which the sparse loss
# treats as one-hot vectors. If y were already one-hot encoded,
# categorical_crossentropy would be the matching loss.
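
The comments above describe softmax and the reason for the "sparse" loss. As a rough NumPy illustration (not how Keras implements it internally), the sketch below computes softmax for x = [1, 2, 3] and shows that the cross-entropy computed from an integer label equals the one computed from the corresponding one-hot vector. The function names are hypothetical.

```python
import numpy as np

def softmax(logits):
    """Turn a vector of scores into a probability distribution."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    exps = np.exp(shifted)
    return exps / exps.sum()

def sparse_crossentropy(probs, label_index):
    """Cross-entropy when the label is an integer class index."""
    return -np.log(probs[label_index])

def onehot_crossentropy(probs, onehot):
    """Cross-entropy when the label is a one-hot vector."""
    return -np.sum(onehot * np.log(probs))

probs = softmax(np.array([1.0, 2.0, 3.0]))
label = 2                   # integer class index, like the Fashion-MNIST labels
onehot = np.eye(3)[label]   # the same label as a one-hot vector

print(probs, probs.sum())
print(sparse_crossentropy(probs, label), onehot_crossentropy(probs, onehot))
```

Both losses give the same number; the sparse form simply avoids building the one-hot vectors.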

model.compile(loss="sparse_categorical_crossentropy",
              optimizer="sgd",
              metrics=["accuracy"])

4. Train with callbacks and save the weights

import os

log_dir = os.path.join('model-callbacks_logs')  # workaround for a bug on Windows 10
os.makedirs(log_dir, exist_ok=True)

# Write TensorBoard logs, including weight histograms after every epoch.
tensorboard = tf.keras.callbacks.TensorBoard(log_dir=log_dir, histogram_freq=1)
# Stop if val_loss has not improved by at least 1e-3 for 5 consecutive epochs.
EarlyStopping = keras.callbacks.EarlyStopping(patience=5, min_delta=1e-3)

save_model_name = './model-callbacks/model-callbacks.h5'
check_path = './model-callbacks/callbacks/cp-{epoch:04d}.ckpt'
save_dir = './model-callbacks/'
# Creates save_dir and its callbacks subdirectory if they do not exist yet.
os.makedirs(os.path.join(save_dir, 'callbacks'), exist_ok=True)

# period=5: save the weights once every 5 epochs.
# (Newer TensorFlow releases deprecate `period` in favor of `save_freq`.)
save_model_cb = tf.keras.callbacks.ModelCheckpoint(check_path, save_weights_only=True, verbose=1, period=5)
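
To see which files this checkpoint setup should produce, the sketch below expands the check_path template in plain Python. It is a simplified model of the callback's behavior, assuming a save happens on every epoch number divisible by period; the variable names period, epochs, and saved are introduced here only for illustration.

```python
check_path = './model-callbacks/callbacks/cp-{epoch:04d}.ckpt'
period = 5    # same value passed to ModelCheckpoint above
epochs = 10   # same value passed to model.fit

# {epoch:04d} is a standard Python format field: the epoch number, zero-padded to 4 digits.
saved = [check_path.format(epoch=e)
         for e in range(1, epochs + 1)
         if e % period == 0]

for path in saved:
    print(path)
```

For a 10-epoch run this lists cp-0005.ckpt and cp-0010.ckpt. A weights file written this way can later be restored with model.load_weights on a model that has the same architecture.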

history = model.fit(x_train_scaled, y_train, epochs=10,
                    validation_data=(x_valid_scaled, y_valid),
                    callbacks=[save_model_cb, tensorboard, EarlyStopping])
# Save the full model (architecture, weights, optimizer state) as a single HDF5 file.
model.save(save_model_name)

Train on 55000 samples, validate on 5000 samples

Epoch 1/10

55000/55000 [==============================] - 3s 60us/sample - loss: 0.8764 - accuracy: 0.7103 - val_loss: 0.6043 - val_accuracy: 0.7900

Epoch 2/10

55000/55000 [==============================] - 3s 54us/sample - loss: 0.5675 - accuracy: 0.8038 - val_loss: 0.5122 - val_accuracy: 0.8256

Epoch 3/10

55000/55000 [==============================] - 3s 56us/sample - loss: 0.5043 - accuracy: 0.8242 - val_loss: 0.4731 - val_accuracy: 0.8354

Epoch 4/10

55000/55000 [==============================] - 3s 55us/sample - loss: 0.4701 - accuracy: 0.8350 - val_loss: 0.4505 - val_accuracy: 0.8454

Epoch 5/10

54304/55000 [============================>.] - ETA: 0s - loss: 0.4476 - accuracy: 0.8424

Epoch 00005: saving model to ./model-callbacks/callbacks/cp-0005.ckpt

55000/55000 [==============================] - 3s 59us/sample - loss: 0.4476 - accuracy: 0.8421 - val_loss: 0.4343 - val_accuracy: 0.8530

Epoch 6/10

55000/55000 [==============================] - 3s 55us/sample - loss: 0.4307 - accuracy: 0.8478 - val_loss: 0.4214 - val_accuracy: 0.8538

Epoch 7/10

55000/55000 [==============================] - 3s 55us/sample - loss: 0.4169 - accuracy: 0.8529 - val_loss: 0.4101 - val_accuracy: 0.8618

Epoch 8/10

55000/55000 [==============================] - 3s 56us/sample - loss: 0.4059 - accuracy: 0.8583 - val_loss: 0.4022 - val_accuracy: 0.8620

Epoch 9/10

55000/55000 [==============================] - 3s 55us/sample - loss: 0.3964 - accuracy: 0.8599 - val_loss: 0.3971 - val_accuracy: 0.8616

Epoch 10/10

54592/55000 [============================>.] - ETA: 0s - loss: 0.3884 - accuracy: 0.8628

Epoch 00010: saving model to ./model-callbacks/callbacks/cp-0010.ckpt

55000/55000 [==============================] - 3s 58us/sample - loss: 0.3885 - accuracy: 0.8629 - val_loss: 0.3894 - val_accuracy: 0.8648
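
Training ran for all 10 epochs, so the early-stopping callback never fired. The sketch below is a simplified pure-Python model of the patience and min_delta rule (it is not the Keras source), applied to the val_loss values from the log above. The function name early_stop_epoch is hypothetical.

```python
def early_stop_epoch(val_losses, patience, min_delta):
    """Return the 1-based epoch at which training would stop, or None if it never stops."""
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:   # improved by more than min_delta
            best = loss
            wait = 0
        else:                         # no sufficient improvement this epoch
            wait += 1
            if wait >= patience:
                return epoch
    return None

# val_loss per epoch, copied from the training log above.
logged = [0.6043, 0.5122, 0.4731, 0.4505, 0.4343,
          0.4214, 0.4101, 0.4022, 0.3971, 0.3894]
print(early_stop_epoch(logged, patience=5, min_delta=1e-3))

# A made-up plateau for comparison: no improvement after epoch 2.
plateau = [0.5, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4]
print(early_stop_epoch(plateau, patience=5, min_delta=1e-3))
```

Every logged epoch improves val_loss by more than 1e-3, so the first call returns None. In the plateau example the rule triggers at epoch 7, five epochs after the last improvement.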

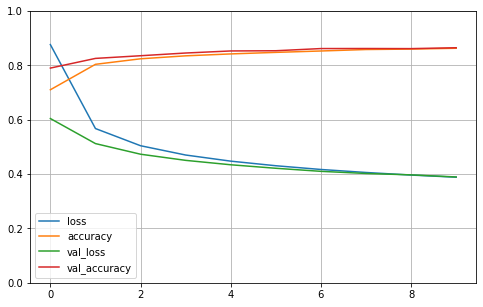

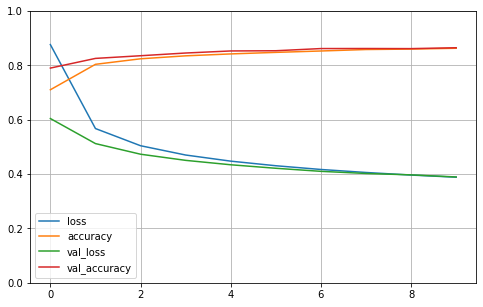

5. Plot the training and validation curves

def plot_learning_curves(history):
    # history.history maps each metric name to a list with one value per epoch.
    pd.DataFrame(history.history).plot(figsize=(8, 5))
    plt.grid(True)
    plt.gca().set_ylim(0, 1)
    plt.show()

plot_learning_curves(history)
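
The plotted data can also be inspected directly. The sketch below rebuilds part of the history dictionary by hand from the training log earlier in the post (only loss and val_loss are included) and reads two numbers off it. The helper names best_epoch and final_gap are hypothetical.

```python
# Per-epoch values copied from the training log; history.history has the same layout.
history_dict = {
    "loss":     [0.8764, 0.5675, 0.5043, 0.4701, 0.4476,
                 0.4307, 0.4169, 0.4059, 0.3964, 0.3885],
    "val_loss": [0.6043, 0.5122, 0.4731, 0.4505, 0.4343,
                 0.4214, 0.4101, 0.4022, 0.3971, 0.3894],
}

def best_epoch(values):
    """Return the 1-based epoch with the smallest value."""
    return min(range(len(values)), key=lambda i: values[i]) + 1

def final_gap(history_dict):
    """Validation loss minus training loss at the last epoch."""
    return history_dict["val_loss"][-1] - history_dict["loss"][-1]

print(best_epoch(history_dict["val_loss"]))
print(round(final_gap(history_dict), 4))
```

With the logged values, the lowest validation loss falls in epoch 10 and the final gap between validation and training loss is about 0.0009, so the curves show no sign of overfitting within these 10 epochs.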

model.evaluate(x_test_scaled, y_test)

10000/10000 [==============================] - 0s 28us/sample - loss: 0.4255 - accuracy: 0.8471

[0.4255402647733688, 0.8471]

test_loss, test_acc = model.evaluate(x_test_scaled, y_test)
print("Accuracy: %.4f, evaluated on %d images" % (test_acc, len(x_test)))

10000/10000 [==============================] - 0s 31us/sample - loss: 0.4255 - accuracy: 0.8471

Accuracy: 0.8471, evaluated on 10000 images

The test accuracy is 84.71%.

This article was modified by the author on 2021-04-28 14:51.