Classification Models with Keras

0. Import the required libraries

import matplotlib as mpl

import matplotlib.pyplot as plt

%matplotlib inline

import numpy as np

import sklearn

import pandas as pd

import os

import sys

import time

import tensorflow as tf

from tensorflow import keras

print(tf.__version__)

print(sys.version_info)

for module in mpl, np, pd, sklearn, tf, keras:
    print(module.__name__, module.__version__)

2.0.0-alpha0

sys.version_info(major=3, minor=6, micro=13, releaselevel='final', serial=0)

matplotlib 3.3.4

numpy 1.16.2

pandas 1.1.5

sklearn 0.24.1

tensorflow 2.0.0-alpha0

tensorflow.python.keras.api._v2.keras 2.2.4-tf

1. Load the dataset and split it into training, validation, and test sets

fashion_mnist = keras.datasets.fashion_mnist

(x_train_all, y_train_all), (x_test, y_test) = fashion_mnist.load_data()

x_valid, x_train = x_train_all[:5000], x_train_all[5000:]

y_valid, y_train = y_train_all[:5000], y_train_all[5000:]

# Check the shapes

print(x_valid.shape, y_valid.shape)

print(x_train.shape, y_train.shape)

print(x_test.shape, y_test.shape)

# Option 2 (recommended)
# import gzip
# # Loader function. data_folder is the directory that holds the .gz data; it contains 4 files:
# # 'train-labels-idx1-ubyte.gz', 'train-images-idx3-ubyte.gz',
# # 't10k-labels-idx1-ubyte.gz', 't10k-images-idx3-ubyte.gz'
# def load_data(data_folder):
#     files = [
#         'train-labels-idx1-ubyte.gz', 'train-images-idx3-ubyte.gz',
#         't10k-labels-idx1-ubyte.gz', 't10k-images-idx3-ubyte.gz'
#     ]
#     paths = []
#     for fname in files:
#         paths.append(os.path.join(data_folder, fname))
#     with gzip.open(paths[0], 'rb') as lbpath:
#         y_train = np.frombuffer(lbpath.read(), np.uint8, offset=8)
#     with gzip.open(paths[1], 'rb') as imgpath:
#         x_train = np.frombuffer(
#             imgpath.read(), np.uint8, offset=16).reshape(len(y_train), 28, 28)
#     with gzip.open(paths[2], 'rb') as lbpath:
#         y_test = np.frombuffer(lbpath.read(), np.uint8, offset=8)
#     with gzip.open(paths[3], 'rb') as imgpath:
#         x_test = np.frombuffer(
#             imgpath.read(), np.uint8, offset=16).reshape(len(y_test), 28, 28)
#     return (x_train, y_train), (x_test, y_test)
# (x_train_all, y_train_all), (x_test, y_test) = load_data('../keras/fashion/')
# x_valid, x_train = x_train_all[:5000], x_train_all[5000:]
# y_valid, y_train = y_train_all[:5000], y_train_all[5000:]
# print(x_valid.shape, x_train.shape, y_valid.shape, y_train.shape)

(5000, 28, 28) (5000,)

(55000, 28, 28) (55000,)

(10000, 28, 28) (10000,)
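The commented-out loader skips 8 bytes for label files and 16 bytes for image files. Those offsets match the IDX file headers: a label file starts with a magic number and an item count (4 bytes each), and an image file adds the row and column counts. The sketch below is only an illustration of that parsing step. It builds two tiny fake files in memory instead of reading the real ones, and the helpers `make_idx_labels` and `make_idx_images` are hypothetical names introduced here.

```python
import gzip
import struct

import numpy as np


def make_idx_labels(labels):
    """Build a gzip-compressed IDX1 label file in memory (hypothetical helper)."""
    header = struct.pack(">II", 2049, len(labels))  # magic number, item count
    return gzip.compress(header + bytes(labels))


def make_idx_images(images):
    """Build a gzip-compressed IDX3 image file in memory (hypothetical helper)."""
    n, rows, cols = images.shape
    header = struct.pack(">IIII", 2051, n, rows, cols)  # magic, count, rows, cols
    return gzip.compress(header + images.astype(np.uint8).tobytes())


# Two fake 28x28 "images" and their labels, standing in for the real files.
fake_images = np.arange(2 * 28 * 28, dtype=np.uint32).reshape(2, 28, 28) % 256
fake_labels = [3, 7]

label_bytes = gzip.decompress(make_idx_labels(fake_labels))
image_bytes = gzip.decompress(make_idx_images(fake_images))

# Same parsing calls as in load_data: skip the header, read the rest as uint8.
y = np.frombuffer(label_bytes, np.uint8, offset=8)
x = np.frombuffer(image_bytes, np.uint8, offset=16).reshape(len(y), 28, 28)

print(y, x.shape)
```

Reading the label file first matters because the image array is reshaped with `len(y)`, which is why the loader opens the files in that order.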

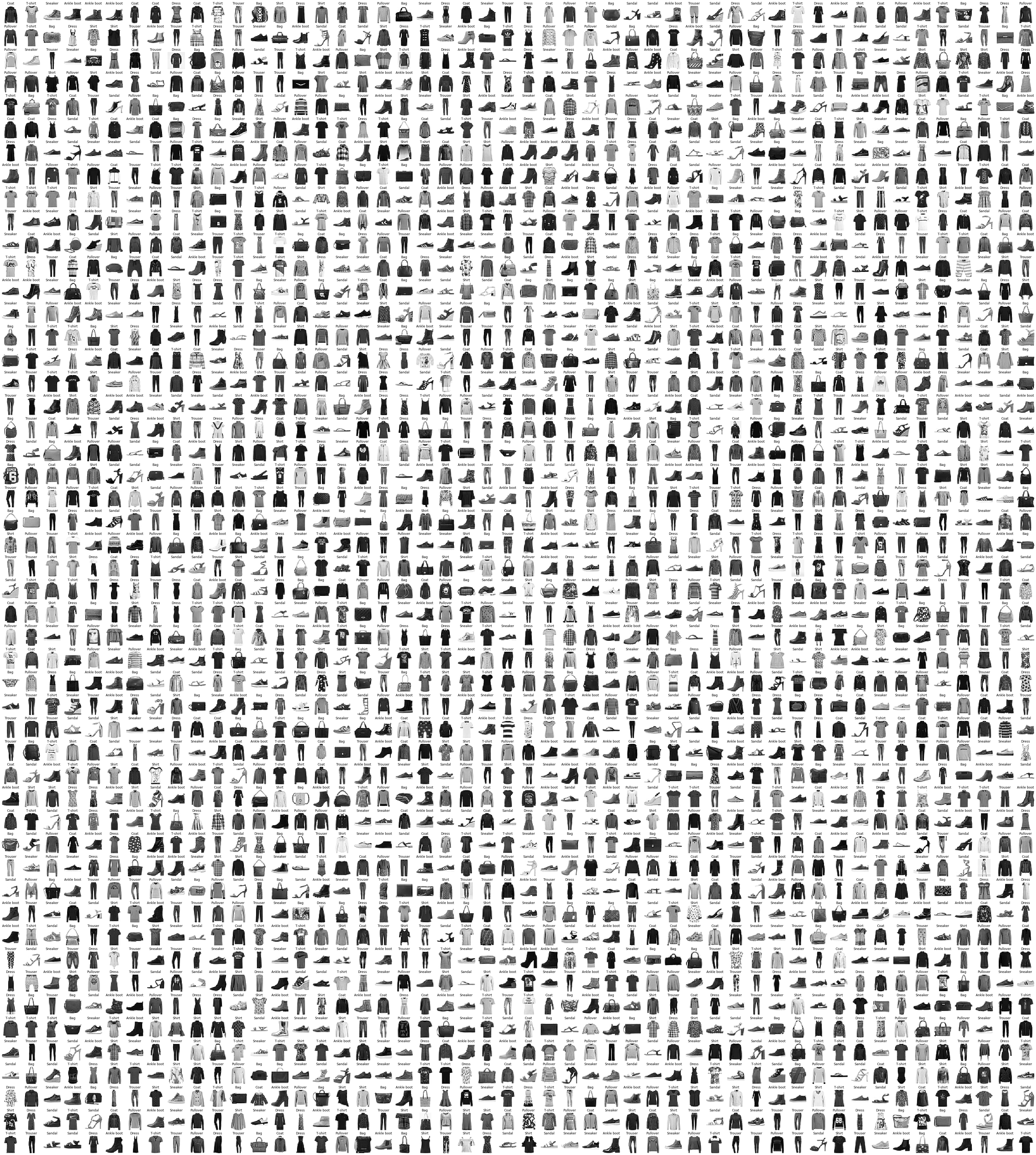

# Display a sample image
def show_single_image(img_arr):
    plt.imshow(img_arr, cmap="binary")
    plt.show()

show_single_image(x_train[0])

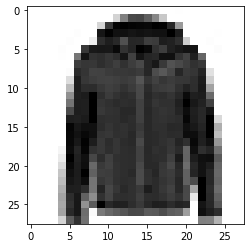

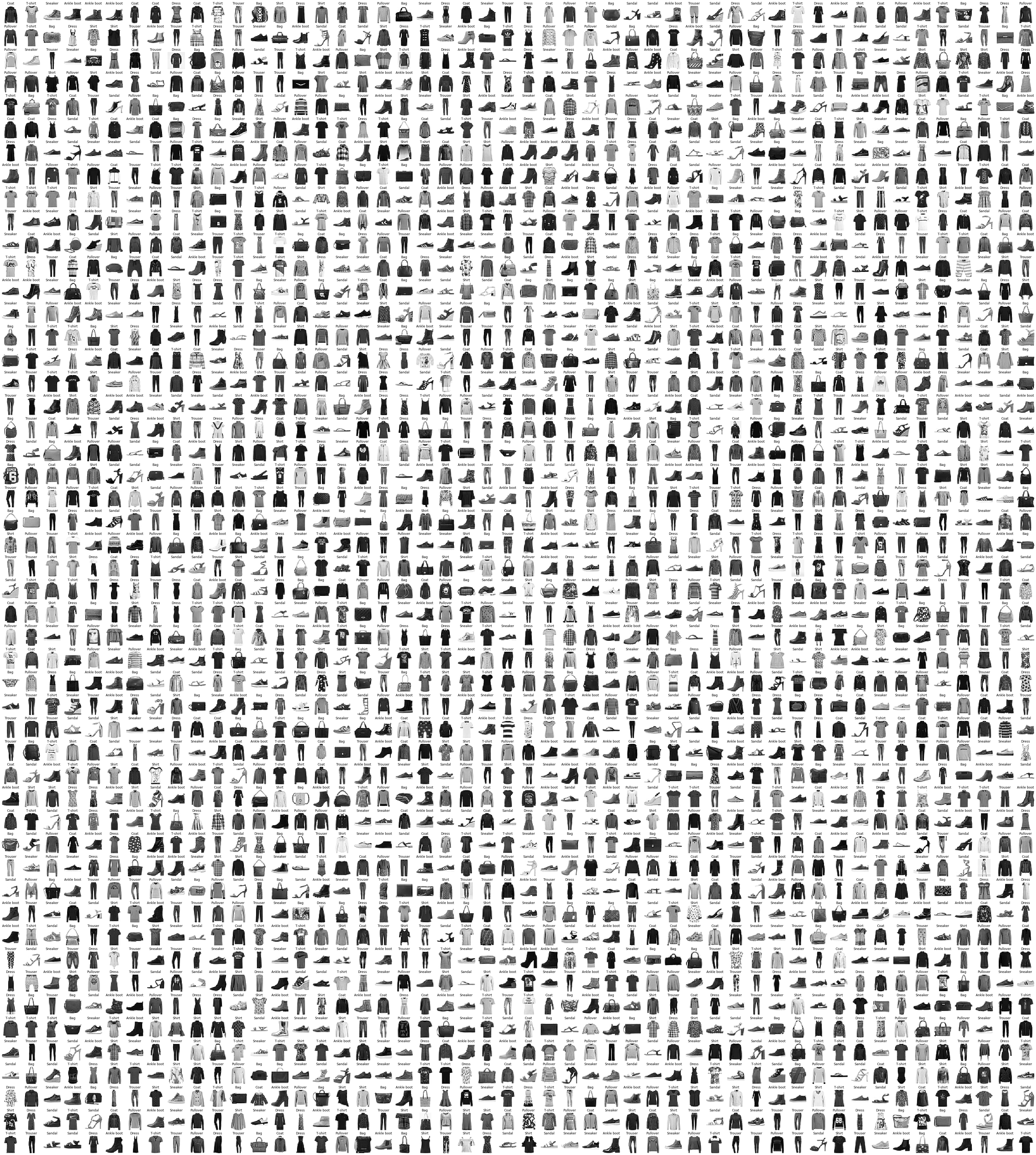

Tile many images into one large figure to get an overview

def show_imgs(n_rows, n_cols, x_data, y_data, class_names):
    assert len(x_data) == len(y_data)
    assert n_rows * n_cols < len(x_data)
    plt.figure(figsize = (n_cols * 1.4, n_rows * 1.6))
    for row in range(n_rows):
        for col in range(n_cols):
            # Position of this image in the flattened grid
            index = n_cols * row + col
            plt.subplot(n_rows, n_cols, index+1)
            plt.imshow(x_data[index], cmap="binary",
                       interpolation = 'nearest')
            plt.axis('off')
            plt.title(class_names[y_data[index]])
    plt.show()

class_names = ['T-shirt', 'Trouser', 'Pullover', 'Dress',
               'Coat', 'Sandal', 'Shirt', 'Sneaker',
               'Bag', 'Ankle boot']

show_imgs(50, 50, x_train, y_train, class_names)
print(x_train.shape)

(55000, 28, 28)

2. Build the model

# tf.keras.models.Sequential()
# Option 1: add the layers one at a time
"""
model = keras.models.Sequential()
model.add(keras.layers.Flatten(input_shape=[28, 28]))
model.add(keras.layers.Dense(300, activation="relu"))
model.add(keras.layers.Dense(100, activation="relu"))
model.add(keras.layers.Dense(10, activation="softmax"))
"""
# Option 2: pass the list of layers to the constructor
model = keras.models.Sequential([
    keras.layers.Flatten(input_shape=[28, 28]),
    keras.layers.Dense(300, activation='relu'),
    keras.layers.Dense(100, activation='relu'),
    keras.layers.Dense(10, activation='softmax')
])

model.summary()

Model: "sequential"

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
flatten (Flatten)            (None, 784)               0
_________________________________________________________________
dense (Dense)                (None, 300)               235500
_________________________________________________________________
dense_1 (Dense)              (None, 100)               30100
_________________________________________________________________
dense_2 (Dense)              (None, 10)                1010
=================================================================
Total params: 266,610
Trainable params: 266,610
Non-trainable params: 0
_________________________________________________________________
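The parameter counts in the summary can be checked by hand: a Dense layer has one weight per input-unit pair plus one bias per unit. A minimal sketch of that arithmetic, where `dense_params` is a helper name made up for this illustration:

```python
def dense_params(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units


# Flatten output size, followed by the widths of the three Dense layers
layer_sizes = [28 * 28, 300, 100, 10]
counts = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
total = sum(counts)

print(counts, total)  # [235500, 30100, 1010] 266610
```

The Flatten layer only reshapes its input, so it contributes no parameters.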

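# relu: y = max(0, x)
# softmax: turns a vector into a probability distribution. x = [x1, x2, x3],
#          y = [e^x1/sum, e^x2/sum, e^x3/sum], sum = e^x1 + e^x2 + e^x3
# Why "sparse": each y is a class index, and the loss treats it as the
# corresponding one-hot vector (y -> one_hot -> [...])
model.compile(loss="sparse_categorical_crossentropy",
              optimizer = "sgd",
              metrics = ["accuracy"])

model.layers

(Output: a list of the model's four layer objects, one Flatten followed by three Dense. Their representations were lost when the page was exported.)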
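The relu and softmax formulas in the comments above can be written out directly in NumPy. This is a small sketch for illustration, not how Keras implements them internally. Subtracting the maximum before exponentiating is an addition made here for numerical stability, and it leaves the result unchanged.

```python
import numpy as np


def relu(x):
    # y = max(0, x), applied element-wise
    return np.maximum(0, x)


def softmax(x):
    # Subtracting the max avoids overflow in exp and does not change the result
    e = np.exp(x - np.max(x))
    return e / e.sum()


x = np.array([1.0, 2.0, 3.0])
y = softmax(x)

print(relu(np.array([-2.0, 0.0, 3.0])))
print(y, y.sum())
```

The softmax outputs are all positive and sum to 1, which is why the last layer of the model can be read as class probabilities.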

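To make the "sparse" remark concrete: with integer labels, the cross-entropy simply picks out the predicted probability of the true class, which gives the same number as expanding the labels to one-hot vectors first. The sketch below uses made-up probabilities and labels and plain NumPy rather than the Keras loss itself.

```python
import numpy as np

# Hypothetical softmax outputs: 2 samples, 3 classes
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.1, 0.8]])
labels = np.array([0, 2])  # sparse labels: one class index per sample

# Sparse form: index the probability of the true class directly
sparse_loss = -np.log(probs[np.arange(len(labels)), labels])

# One-hot form: expand the labels, then sum over the class axis
one_hot = np.eye(probs.shape[1])[labels]
dense_loss = -(one_hot * np.log(probs)).sum(axis=1)

print(sparse_loss, dense_loss)
```

Since `y_train` here holds class indices from 0 to 9, the sparse variant saves the one-hot conversion step.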

3. Train and save the model

# [None, 784] * W + b -> [None, 300], where W.shape = [784, 300] and b.shape = [300]
history = model.fit(x_train, y_train, epochs=10,
                    validation_data=(x_valid, y_valid))

save_model_name = './modelckpt/model.h5'
if not os.path.exists('modelckpt/'):
    os.mkdir('modelckpt/')
model.save(save_model_name)

Train on 55000 samples, validate on 5000 samples

Epoch 1/10

55000/55000 [==============================] - 3s 51us/sample - loss: 0.3054 - accuracy: 0.8848 - val_loss: 0.4510 - val_accuracy: 0.8548

Epoch 2/10

55000/55000 [==============================] - 3s 51us/sample - loss: 0.3016 - accuracy: 0.8869 - val_loss: 0.4398 - val_accuracy: 0.8610

Epoch 3/10

55000/55000 [==============================] - 3s 52us/sample - loss: 0.2980 - accuracy: 0.8877 - val_loss: 0.4571 - val_accuracy: 0.8568

Epoch 4/10

55000/55000 [==============================] - 3s 52us/sample - loss: 0.2938 - accuracy: 0.8902 - val_loss: 0.4521 - val_accuracy: 0.8582

Epoch 5/10

55000/55000 [==============================] - 3s 52us/sample - loss: 0.2913 - accuracy: 0.8909 - val_loss: 0.4274 - val_accuracy: 0.8624

Epoch 6/10

55000/55000 [==============================] - 3s 50us/sample - loss: 0.2898 - accuracy: 0.8914 - val_loss: 0.4413 - val_accuracy: 0.8588

Epoch 7/10

55000/55000 [==============================] - 3s 51us/sample - loss: 0.2853 - accuracy: 0.8920 - val_loss: 0.4350 - val_accuracy: 0.8636

Epoch 8/10

55000/55000 [==============================] - 3s 51us/sample - loss: 0.2821 - accuracy: 0.8936 - val_loss: 0.4405 - val_accuracy: 0.8596

Epoch 9/10

55000/55000 [==============================] - 3s 58us/sample - loss: 0.2805 - accuracy: 0.8932 - val_loss: 0.4581 - val_accuracy: 0.8474

Epoch 10/10

55000/55000 [==============================] - 3s 62us/sample - loss: 0.2784 - accuracy: 0.8958 - val_loss: 0.4382 - val_accuracy: 0.8652
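The shape comment before `model.fit` describes what the first Dense layer computes. A rough NumPy sketch of that step, assuming a batch of 32 random stand-in images and random weights (neither comes from the trained model):

```python
import numpy as np

rs = np.random.RandomState(0)

batch = rs.rand(32, 28, 28)             # 32 stand-in images; the batch size is arbitrary
flat = batch.reshape(len(batch), -1)    # what Flatten does: (32, 28, 28) -> (32, 784)

W = rs.normal(size=(784, 300))          # random stand-in weights, not the trained ones
b = np.zeros(300)

hidden = np.maximum(0, flat @ W + b)    # Dense(300, activation="relu")
print(flat.shape, hidden.shape)
```

The `None` in the Keras shapes is the batch dimension, which is 32 in this sketch.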

type(history)

tensorflow.python.keras.callbacks.History

history.history

{'loss': [0.305442014449293,
  0.3016150927500291,
  0.29796552203135057,
  0.29384967036897486,
  0.2912601308887655,
  0.28980197758457876,
  0.28531043367385867,
  0.2821029525247487,
  0.2804998582287268,
  0.27841436649452556],
 'accuracy': [0.8848182,
  0.8868727,
  0.88765454,
  0.8902364,
  0.89092726,
  0.8913818,
  0.89198184,
  0.8936,
  0.89323634,
  0.89576364],
 'val_loss': [0.4510028466701508,
  0.43975458673238754,
  0.4571208846330643,
  0.4521390220403671,
  0.4273876326799393,
  0.4413052448749542,
  0.43496818118691444,
  0.44045424642562864,
  0.4580733994960785,
  0.43815696096420287],
 'val_accuracy': [0.8548,
  0.861,
  0.8568,
  0.8582,
  0.8624,
  0.8588,
  0.8636,
  0.8596,
  0.8474,
  0.8652]}
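Because `history.history` is a plain dict of per-epoch lists, it can be inspected without any plotting. As a small illustration, the snippet below finds the epochs with the lowest validation loss and the highest validation accuracy, using the validation values from the run above rounded to four decimals.

```python
# Validation metrics from the run above, rounded to 4 decimals
val_loss = [0.4510, 0.4398, 0.4571, 0.4521, 0.4274,
            0.4413, 0.4350, 0.4405, 0.4581, 0.4382]
val_accuracy = [0.8548, 0.8610, 0.8568, 0.8582, 0.8624,
                0.8588, 0.8636, 0.8596, 0.8474, 0.8652]

# Epochs are 1-based in the training log, list indices are 0-based
best_loss_epoch = min(range(len(val_loss)), key=val_loss.__getitem__) + 1
best_acc_epoch = max(range(len(val_accuracy)), key=val_accuracy.__getitem__) + 1

print(best_loss_epoch, best_acc_epoch)  # 5 10
```

The two criteria pick different epochs here, which reflects how much the validation metrics fluctuate from epoch to epoch in this run.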

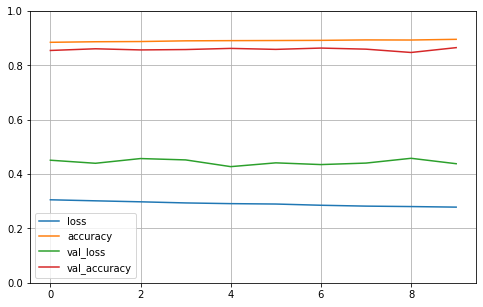

4. Plot the training and validation curves

def plot_learning_curves(history):
    # One line per metric in history.history, with epochs on the x axis
    pd.DataFrame(history.history).plot(figsize=(8, 5))
    plt.grid(True)
    plt.gca().set_ylim(0, 1)
    plt.show()

plot_learning_curves(history)

# model.evaluate
# Takes data and labels, returns the loss and the accuracy.
# # Evaluate the model without returning predictions
# loss, accuracy = model.evaluate(X_test, Y_test)
# print('\ntest loss', loss)
# print('accuracy', accuracy)
# model.predict
# Takes test data, returns the predictions
# (typically used when the predictions themselves are needed)
# Run the model on the test set and get the predictions
# y_pred = model.predict(X_test, batch_size = 1)

test_loss, test_acc = model.evaluate(x_test, y_test)
print("Accuracy: %.4f, tested on %d images" % (test_acc, len(x_test)))

10000/10000 [==============================] - 0s 31us/sample - loss: 0.4674 - accuracy: 0.8567
Accuracy: 0.8567, tested on 10000 images

Test accuracy: 85.67%
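When `model.predict` is used instead of `model.evaluate`, the accuracy has to be computed from the predicted probabilities. A minimal sketch of that step, using a small hand-written probability array in place of real model output:

```python
import numpy as np

# Hypothetical softmax outputs for 4 samples and 3 classes,
# in the shape model.predict would return
y_prob = np.array([[0.8, 0.1, 0.1],
                   [0.2, 0.5, 0.3],
                   [0.3, 0.3, 0.4],
                   [0.6, 0.3, 0.1]])
y_true = np.array([0, 1, 2, 1])

y_pred = np.argmax(y_prob, axis=1)    # most probable class per row
accuracy = np.mean(y_pred == y_true)  # fraction of correct predictions

print(y_pred, accuracy)  # [0 1 2 0] 0.75
```

With the real model, `y_prob` would have shape (10000, 10) for the test set, and `y_pred` could also be mapped through `class_names` to get readable labels.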

This article was last modified by the author on 2021-04-28 14:35.